This post is part of a series on the history of computing in sociocultural anthropology.

If we had to pick a moment when computers first appeared as anthropological research tools, it might be the 1962 Wenner-Gren Symposium on “The Uses of Computers in Anthropology.” The proceedings would be published three years later, in a volume edited by Dell Hymes. Over the following decades, as journals featured reviews of computer programs for anthropologists, the symposium regularly served as the inaugural reference.

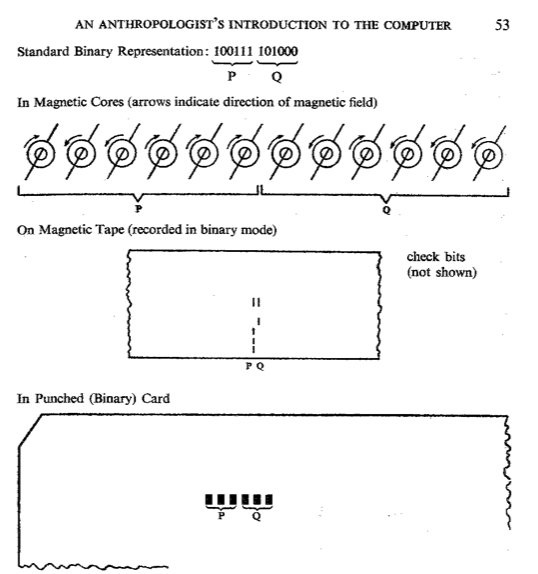

The meeting, held at the Foundation’s Austrian castle, emerged from conversations among anthropologists, linguists, and semioticians in and around the Center for Advanced Study in the Behavioral Sciences at Stanford, all of whom had been experimenting with computer-aided methods for their research in folklore, social structure, and linguistic data processing. The symposium itself was interdisciplinary and covered a wide range of topics: from the technical details of computer function, including such minutiae as the electromagnetic operations of memory (presented by anthropologists, no less; Romney and Lamb 1965), to numerical methods of ethnological classification (Driver 1965), to a cybernetic model of the eye and observation in silico (“The Machine which Observes and Describes,” Ceccato 1965).

Kim Romney and Sidney Lamb give an “introduction to the computer”

As the range of interests represented at the symposium suggests, the history of computing in anthropology is in large part a history of the relationship between anthropology and other disciplines. These other disciplines were sometimes technical: to do anthropology with computers during the 1960s meant to do anthropology with programmers, and a significant portion of the discussion recounted in the symposium proceedings centers on how much an anthropologist needed to know about programming in order to work effectively with computers and their human handlers. This debate raised the question of statistical and mathematical analyses as well: should anthropologists be expected to do this work themselves? What would remain of anthropology if new forms of analysis continued to be marked as “outside” of proper anthropological work?

Hymes suggested that a failure to engage with computing could cause anthropology to lose “its present status as a peer among the human sciences” (1965:30), as its neighbors took up the formalized, quantitative, model-building methods that were idiomatic to computers but anathema to many cultural anthropologists. He noted the growing popularity of computer-aided research and the attendant increase in funding available for it: “Our society, and the sciences adjacent to anthropology, are such that it seems inevitable that the computer will be used extensively, willy-nilly, so that the choice is only whether in the immediate future the computer will be used well or ill” (1965:29).

When anthropologists adopted computers as research tools, the machines entered a set of long-standing debates about the discipline’s scientific standing and the features that distinguished it from the other human sciences. The anthropologists who took most readily to computing during the 1960s were part of the constellation of methods and research interests collected under the name “ethnoscience.” “Ethnoscience” represented a somewhat heterogeneous set of research programs, characterized by an interest in classification systems, formal methods, and cognitive science. If using computers was an inherently interdisciplinary affair, so was ethnoscience, which was strongly inflected by linguistic methods, the “cognitive revolution” in psychology, and a cascading set of “quantitative revolutions” in the other human sciences.

“Ethnoscience” provided a term in which two occasionally antagonistic tendencies could reside: on the one hand, it referred to the study of “local” science, as in “ethnobotany”; on the other, it indicated a “scientific” approach to ethnography itself, characterized by formalized methods of data collection and analysis. Where the former tendency might have opened up ethnoscience to a Boasian relativism, the latter pitched the ethnoscientists as universalizing nomothetes against those who advocated for an anthropology keyed to particularism and effected through the sensitive interpretation of ethnographers. Depending on what aspect was being emphasized or who happened to be doing the work, this research was also variously called cognitive anthropology, componential analysis, ethnosemantics, the new ethnography, or mathematical anthropology.¹

Whether and how anthropology could or should be made scientific was a source of some debate (as it remains today). Computers brought together in one tool many of the values of an explicitly scientific anthropology (formalism, necessary explicitness, mathematization, and large, comparable datasets), and they served as a potent metaphor for that program. Hortense Powdermaker, a critic of computational approaches, wrote ironically in her memoir *Stranger and Friend*:

The industrial revolution has hit anthropology! [… but] Computers do not replace men who sniff and hunt for clues and who ponder the imponderables. The danger is, and it has already happened, that problems will be picked, not because of their significance, but because data on them can be programmed for computers. In such a situation, anthropology would be reduced to the work of technicians. (1966:299-300)

This concern, that the computer might be such an intoxicating tool that it would warp properly anthropological research questions, was already present in the discussions at the 1962 Wenner-Gren symposium and was hardly controversial. Indeed, the need for “men who sniff and hunt for clues and who ponder the imponderables” remains a favorite theme for critics of computational analysis to this day: see, for example, the regular reminders from both proponents and critics of big data that findings do not make themselves and that human “insight” or “interpretation” is still crucial.

However, while recognizing the potential perils of a field warped by its tools, Hymes and others at the Wenner-Gren symposium suggested that these risks were outweighed by the positive influence computing might have on how anthropological research questions were posed and answered: “An anthropological theory sufficiently explicit for its consequences to be tested with the aid of the computer broaches frontiers for both” (1963:127). The values of explicitness and formalism (and their corollaries: comparability, reliability, and testable validity) were part of a push toward a more scientific ethnography, and toward one that made less of a mystery of its methods than did existing practices of ethnographic authority.

What critics like Powdermaker found threatening was not only the formalism and mathematization of computational approaches (there was already a long-term push to mathematize the social sciences that predated computers), but the tireless iterability with which such methods could now be applied. Indeed, there was nothing the computer could do that people could not, given enough time (Tim Ingold would later, fairly accurately, describe the computer as “nothing more, and nothing less, than a box of short-cuts”). However, the speed and reliability of digital computers produced a qualitative change in how such simple calculations were perceived. The computer made formal analyses more practically plausible and provided a sense that they could run “on their own,” increasing the risk that computer-produced findings would be seen as self-sufficient or objective, reducing the anthropologist’s role from interpreter to “technician.”

The last strand to be twisted into this story of mid-century formalism is the growth of large anthropological data sets. At the end of his introduction to the symposium proceedings, Hymes wrote that the future of anthropology would depend on increased attention to two things:

the logic and practice of quantitative and qualitative analysis, and the forms of cooperation and integration needed to make our stores of data systematic, comparable, accessible to each other and to theory. These demands, for formalization of analysis, and exchange of data […] are precisely the demands made by efficient use of the computer. The story of the computer in anthropology will be the story of how these two demands are met. (1965:31)

In this charge, Hymes followed an argument that had been advanced by Kroeber and others in the context of statistical methods for comparative analysis: the future of anthropology lay in massive cross-cultural comparisons, facilitated by statistical and computational techniques. To make these comparisons possible, it was necessary to bring ethnographic data together without the idiosyncrasies of collection and presentation that characterized typical ethnography.

The classic example of such a large project is Murdock’s Human Relations Area Files project at Yale. By today’s standards, these collections are not “big data,” but for their time they pushed the capabilities of computer systems, and they shared a drive with contemporary big data applications: the resolution of research problems through the continued accumulation of data. Rather than solving a problem by discovering a simple model or interpretation, this drive places the solution at some future point when enough data has been collected to make the pursuit of simple models superfluous.

While ethnoscience and other formalisms seemed briefly poised to take over the mainstream of research in cultural anthropology, the large-scale building of formalized ethnographic datasets never materialized. The linguistics on which much ethnoscience had been based had already fallen out of favor with linguists, and ethnoscientists failed to produce students interested in carrying on their research projects. With a few exceptions, mainstream anthropology abandoned the project of formalized data acquisition and large-scale comparison of cultural data. Formally eliciting data about very constrained cultural domains in so-called “white room ethnography” was drudgery compared to the exotic glamor and close contact of participant-observation. As Roger Keesing wrote in 1972, “Ethnoscience has almost bored itself to death.”

- In “Thick Description,” Geertz described this variety as “a terminological wavering which reflects a deeper uncertainty” (1973:11). For a more in-depth review, see Murray’s “The Dissolution of ‘Classical Ethnoscience’” ↩

Ethnoscience may be out of fashion. But the movement of which it was part lives on, albeit off the radar of “mainstream” anthropology. While attending the annual Sunbelt conferences of the International Network for Social Network Analysis (INSNA) I have had the opportunity to meet Russ Bernard (http://nersp.osg.ufl.edu/~ufruss/cv.htm). At the last Sunbelt conference, the keynote speaker was anthropologist Jeffrey Johnson (http://www.ecu.edu/cs-cas/soci/jeffrey-johnson.cfm), who spoke on the need to stay close to the data used by social network analysts.

Thanks, John. I’ve been appreciating your responses to these pieces! At my home institution, UC Irvine, we’ve got the Institute for Mathematical Behavioral Sciences, which popped up as the more ethnoscience-like (cog anthro, mathematical anthro) folks left the anthropology department. It’s very important to realize that a lot of the paradigms that mainstream cultural anthropologists think of as “vanquished” haven’t actually disappeared — they’ve just shifted around institutionally, and in many cases, they’ve moved into far more influential spots than “we” occupy. That suggests that we need new, less progressivist/teleological ways to think about our methodological critiques: we are not on some trajectory towards ever better explanations or descriptions (cf. Geertz: “What gets better is the precision with which we vex each other”), and, to snag a line of Latour’s I really like, “the past is not surpassed but revisited, repeated, surrounded, protected, recombined, reinterpreted, reshuffled.” Of course, contemporary folks working in ethnoscience-like disciplines would not like the idea of being called “the past,” and the banishing of other epistemologies to the past should make us think about anthropological critiques of allochronism.

All that is to say that the history of computational approaches in cultural anthropology raises some of our central disciplinary questions and paradoxes, even though formalisms are often dismissed quite casually by contemporary ethnographers.

Nick, my pleasure. Looking forward to the next installment in this series.

Considering that formal modeling of kinship terminological systems was once dismissed by a commenter on this blog as not really anthropology, I appreciate Nick’s comment here.